What is gensim?

- Popular open-source NLP library

- Uses top academic models to perform complex tasks

- Building document or word vectors

- Performing topic identification and document comparison

A word embedding or vector is trained from a larger corpus and is a multi-dimensional representation of a word or document.

For example in this graphic we can see that the vector operation king minus queen is approximately equal to man minus woman. Or that Spain is to Madrid as Italy is to Rome

Gensim allows you to build corpora and dictionaries using simple classes and functions. A corpus (or if plural, corpora) is a set of texts used to help perform NLP tasks.

Let’s continue by example,

!!pip install -U gensim from gensim.corpora.dictionary import Dictionary from nltk.tokenize import word_tokenize

my_documents = ['The movie was about a spaceship and aliens.', 'I really liked the movie!', 'Awesome action scenes, but boring characters.', 'The movie was awful! I hate alien films.', 'Space is cool! I liked the movie.', 'More space films, please!',]

Preprocessing steps. For better results, we would want to apply more of preprocessing such as removing punctuation and stop words.

tokenized_docs = [word_tokenize(doc.lower()) ...: for doc in my_documents]This will create a mapping with an id for each token

dictionary = Dictionary(tokenized_docs)

We can take a look at the tokens and their ids by looking at the token2id attribute, which is a dictionary of all of our tokens and their respective ids in our new dictionary.

dictionary.token2id

Creating a gensim corpus

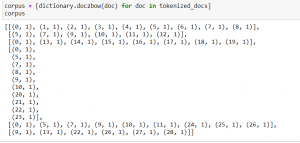

corpus = [dictionary.doc2bow(doc) for doc in tokenized_docs]

corpus

Here we can see that the Gensim corpus is a list of lists, each list item representing one document.

gensimmodels can be easily saved, updated, and reused- Our dictionary can also be updated

- This more advanced and feature rich bag-of-words can be used in future exercises

See you in the next article..

Introduction to Natural Language Processing in Python – (Simple text preprocessing)

IT Tutorial IT Tutorial | Oracle DBA | SQL Server, Goldengate, Exadata, Big Data, Data ScienceTutorial

IT Tutorial IT Tutorial | Oracle DBA | SQL Server, Goldengate, Exadata, Big Data, Data ScienceTutorial