Exadata X8M-2 Image Upgrade Operation Step By Step For Image Version 20.1.9 With Best Practices

SUBJECTS

1-) Introduction

What Is Exadata X8M?

New in Exadata X8M-2

What's New in Oracle Exadata Database Machine 20.1.0

Exadata System Software Update 20.1.9.0.0

2-) Step by Step Image Upgrade Operations on X8M-2 Multi-rack Exadata System

Current Environment

How It Works

Preparation Steps to Patch

Healthcheck of Exadata

Advice

Patch Implementation Strategy Best Practices

Prechk Steps

Prechk Compute Node

Prechk Cell Node

Prechk Switches

Patch Steps

Patch Compute Node

Patch Cell Node

Patch Switches

3-) Conclusion

4-) References

1-) Introduction

What Is Exadata X8M?

- Exadata X8M is the latest generation Exadata Database Machine. It provides in-memory performance for both OLTP and analytics, with the capacity, sharing, and cost benefits of shared storage.
- Exadata X8M introduces three new technologies:
  - Native Persistent Memory
  - New RDMA over Converged Ethernet (RoCE) Network Fabric
  - Ultra-Fast RDMA to Persistent Memory Access
- The new features of Exadata X8M-2 and image version 20.1.9 are explained in detail at https://ittutorial.org/exadata-x8m-2-multi-rack-introduction/

New in Exadata X8M-2

What's New in Oracle Exadata Database Machine 20.1.0

The following features are new in Oracle Exadata System Software 20.1.0:

- Exadata Secure RDMA Fabric Isolation
- Smart Flash Log Write-Back
- Fast In-Memory Columnar Cache Creation
- Cell-to-Cell Rebalance Preserves PMEM Cache Population
- Control Persistent Memory Usage for Specific Databases
- Application Server Update for Management Server

Exadata System Software Update 20.1.9.0.0

- Exadata System Software 20.1.9.0.0 Update is now generally available. 20.1.9.0.0 is a maintenance release that adds critical bug fixes and security fixes on top of the 20.1.x releases.

2-) Step by Step Image Patch Operations on X8M-2 Multirack Exadata System

Current Environment

- 2 full X8M-2 racks (16 compute nodes, 28 storage cells, 4 leaf and 2 spine switches)
- Existing image version: 19.3.10
- Target image version: 20.1.9

How It Works

We apply the patches on the X8M-2 multi-rack system in this order: compute nodes (dbnodes), storage cells (cellnodes), then switches. Because X8M uses a RoCE network fabric, the RoCE switches are upgraded instead of InfiniBand switches.

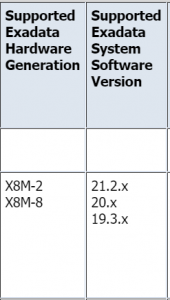
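Every dcli command in this article reads host names from group files under /root (dbs_group, cell_group, roce_group, all_group), and the RoCE switch upgrade reads switch list files. A minimal POSIX shell sketch for building them for the environment above is shown below. The host name prefixes (exa01db, exa01cel, exa01swleaf, exa01swspine) are hypothetical, and the assumption that all_group holds only compute nodes and storage cells is inferred from the commands it is used with (imagehistory, ipconf, ipmitool).

```shell
# write_group FILE PREFIX COUNT
# Writes PREFIX01 .. PREFIX<COUNT> (two-digit suffix), one host per line.
write_group() {
  local file="$1" prefix="$2" count="$3" i=1
  : > "$file"                                   # create or truncate
  while [ "$i" -le "$count" ]; do
    printf '%s%02d\n' "$prefix" "$i" >> "$file"
    i=$((i + 1))
  done
}

# make_groups [DIR]
# Builds the group files for 2 full X8M-2 racks:
# 16 compute nodes, 28 storage cells, 4 leaf + 2 spine RoCE switches.
# All host name prefixes below are HYPOTHETICAL; use your real host names.
make_groups() {
  local dir="${1:-/root}"
  write_group "$dir/dbs_group"  exa01db  16
  write_group "$dir/cell_group" exa01cel 28
  # Leaf and spine lists are kept separate because the switches are
  # upgraded in two runs: leaf first, then spine.
  write_group "$dir/roceswitches_leaf.lst"  exa01swleaf  4
  write_group "$dir/roceswitches_spine.lst" exa01swspine 2
  cat "$dir/roceswitches_leaf.lst" "$dir/roceswitches_spine.lst" > "$dir/roce_group"
  cp "$dir/roce_group" "$dir/roceswitches.lst"  # list used by the switch prechk
  # ASSUMPTION: all_group = compute nodes + storage cells (no switches)
  cat "$dir/dbs_group" "$dir/cell_group" > "$dir/all_group"
}
```

Running `make_groups /root` as root on the first compute node would put the files where the commands in this article expect them; review the generated names before using them.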

Preparation Steps to Patch

Check the supported versions in "Exadata System Software and Hardware Versions Supported" (MOS Document 1626579.1).

Healthcheck of Exadata

#Run exachk --> download and install it from MOS 1070954.1
cd /opt/oracle.ahf
export RAT_ORACLE_HOME=/u01/app/oracle/product/19.0.0.0/dbhome_1
./exachk -a -o v
#Analyze the generated exachk.html report.
#Run exachk on the first node only.

#Exadata healthcheck with dcli
dcli -g /root/dbs_group -l root uptime
dcli -g /root/cell_group -l root uptime
dcli -g /root/roce_group -l root uptime
#dcli -g /root/ibs_group -l root uptime
dcli -l root -g /root/dbs_group dbmcli -e "list alerthistory attributes name,beginTime,alertMessage where alerttype=stateful and endtime=null"
dcli -l root -g /root/cell_group cellcli -e "list alerthistory attributes name,beginTime,alertMessage where alerttype=stateful and endtime=null"
dcli -l root -g /root/all_group "hostname -I"
dcli -l root -g /root/all_group uptime
dcli -l root -g /root/all_group "/opt/oracle.cellos/ipconf -verify"
dcli -l root -g /root/all_group "ipmitool sunoem version"
dcli -l root -g /root/all_group "/usr/bin/ipmitool sunoem cli 'show -script /SP/network'" | grep ipaddress | egrep -v "pendingipaddress"
dcli -l root -g /root/all_group "/usr/bin/ipmitool sunoem cli 'show -script /SP/clock'" | grep timezone
dcli -l root -g /root/all_group "ibstatus"
dcli -l root -g /root/dbs_group "dbmcli -e LIST DBSERVER"
#dcli -l root -g /root/dbs_group "systemctl status dbserverd.service"
dcli -l root -g /root/cell_group cellcli -e "list cell attributes flashcachemode"
dcli -l root -g /root/cell_group cellcli -e "list cell attributes pmemcachemode"
dcli -l root -g /root/cell_group "systemctl list-unit-files celld.service" | grep celld
#dcli -l root -g /root/cell_group chkconfig --list celld
dcli -l root -g /root/cell_group "cellcli -e list griddisk attributes name,status,asmmodestatus,asmdeactivationoutcome"
dcli -l root -g /root/cell_group "cellcli -e list physicaldisk"
dcli -l root -g /root/all_group "imagehistory"
dcli -g /root/all_group -l root "df -Ph" | grep "100%"
#dcli -g /root/ibs_group -l root "df -Ph" | grep "100%"
dcli -g /root/roce_group -l root "df -Ph" | grep "100%"
dcli -l root -g /root/cell_group cellcli -e "list quarantine"
dcli -l root -g /root/all_group "logrotate /etc/logrotate.d/syslog"
dcli -l root -g /root/cell_group imageinfo | grep 'Active image version'
dcli -l root -g /root/cell_group ipmitool sunoem version
dcli -l root -g /root/cell_group chkconfig --list celld
dcli -l root -g /root/dbs_group imageinfo | grep Kernel
dcli -l root -g /root/dbs_group imageinfo | grep 'Image version'
dcli -l root -g /root/dbs_group ipmitool sunoem version
dcli -l root -g /root/dbs_group "/u01/app/19.0.0.0/grid/bin/crsctl config crs"
grep nfs /etc/fstab
dcli -l root -g /root/all_group "/opt/oracle.cellos/ipconf.pl -verify -semantic -at-runtime -check-consistency -verbose -nocodes"
dcli -l root -g /root/all_group "/opt/oracle.cellos/CheckHWnFWProfile"

#Database status
srvctl status database -d db_uniq_name -v
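The `df -Ph | grep "100%"` checks above only catch file systems that are already full. A slightly wider net, sketched below as a hypothetical helper named over_threshold, flags every file system at or above a chosen usage percentage. It assumes the `host: ` prefix that dcli adds to each line and finds the Use% column by its trailing % sign, so it works with or without that prefix.

```shell
# over_threshold [PCT]
# Reads "df -Ph" output on stdin (optionally prefixed with "host: " by dcli)
# and prints every line whose Use% value is >= PCT (default 90).
over_threshold() {
  awk -v limit="${1:-90}" '
    {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^[0-9]+%$/) {                  # the Use% column, e.g. "87%"
          pct = substr($i, 1, length($i) - 1) + 0
          if (pct >= limit) print
          break                                  # only the first % field counts
        }
      }
    }'
}
```

For example, `dcli -g /root/all_group -l root "df -Ph" | over_threshold 90` would list the compute node and cell file systems that are 90% full or more. The header line is skipped automatically because "Use%" has no digits.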

Advice

- Run exachk.
- Check the Exadata health and configuration.
- Check the Exadata alert logs.
- Make sure you can connect to the ILOM of every node.
- Stop the applications.
- Restart all nodes (compute nodes and storage cells).
- Connect to the nodes directly.
- Follow the nodes through their ILOM consoles.
- Uninstall conflicting RPMs.
- Uninstall third-party security RPMs.
- Unmount the NFS mounts.
- Remove the ZFS entries from /etc/fstab.
- Set PermitRootLogin to yes in /etc/ssh/sshd_config.
- Set ClientAliveInterval to 86400 in /etc/ssh/sshd_config.
- Reset the ILOM of every node in all_group.
- Reset the passwords for all_group and the switches.
- Configure NTP for all_group and the switches.
- You can ignore glusterfs RPM errors.
- You can ignore "No link detected eth1" with "-w".
- You can ignore "Yum rolling update requires for grid 11.2.0.2 bp12" if your Grid Infrastructure is not on that version.
- Stop and disable CRS before patching.
- Disable scheduled jobs.
- Patch non-rolling.
- Back up /etc.
- Run the patch manager from the first compute node as root.
- SSH equivalence for the root user must be configured.
- Do not open the log files in a writable mode while monitoring them.
- Upgrade progress can be monitored from the ILOM console.
- Order of operations: dbnodepatchone, cellpatchall + dbnodepatchother, cell cleanup, dbnode cleanup, CRS and database start, switch patch.
- Restart all compute nodes if there is a ZFS disk mount problem after the InfiniBand switch patch.
- Restart the compute nodes if an Ethernet card is down after switch patching.
- Do not keep many files under /root and /opt.
- There must be enough free space in the volume group; check it with vgdisplay.
- The operation is offline. It takes about 5 hours on average when there are no problems.
- Contact me for other problems and advice!
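Several items in the list above edit system files by hand. The sketch below shows one way to apply the two sshd_config settings and to comment out NFS (for example ZFS) entries in /etc/fstab after taking a time-stamped backup. It assumes GNU sed, as shipped with Oracle Linux; the function names are hypothetical and not part of the Exadata tooling.

```shell
# backup_file FILE : keeps a time-stamped copy next to FILE
backup_file() {
  cp -p "$1" "$1.bak.$(date +%Y%m%d%H%M%S)"
}

# set_sshd_option FILE KEY VALUE
# Replaces an existing (possibly commented-out) "KEY ..." line with
# "KEY VALUE", or appends "KEY VALUE" if the key is not present.
# Requires GNU sed (-i, -E and the I flag).
set_sshd_option() {
  local file="$1" key="$2" value="$3"
  if grep -qiE "^[[:space:]]*#?[[:space:]]*${key}[[:space:]]" "$file"; then
    sed -i -E "s/^[[:space:]]*#?[[:space:]]*${key}[[:space:]].*/${key} ${value}/I" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}

# prepare_sshd [FILE] : applies the two sshd settings from the advice list.
# sshd only picks them up after a reload, e.g. "systemctl reload sshd".
prepare_sshd() {
  local file="${1:-/etc/ssh/sshd_config}"
  backup_file "$file"
  set_sshd_option "$file" PermitRootLogin yes
  set_sshd_option "$file" ClientAliveInterval 86400
}

# comment_nfs_mounts [FILE] : comments out active nfs/nfs4 entries
# (for example ZFS shares) in an fstab file, after taking a backup.
comment_nfs_mounts() {
  local file="${1:-/etc/fstab}" tmp
  backup_file "$file"
  tmp=$(mktemp)
  awk '!/^[ \t]*#/ && ($3 == "nfs" || $3 == "nfs4") { print "#" $0; next } { print }' \
    "$file" > "$tmp"
  cat "$tmp" > "$file"      # rewrite in place, keeping owner and permissions
  rm -f "$tmp"
}
```

The sed substitution rewrites every matching line, including one inside a Match block, so review sshd_config afterwards. Unmount the NFS file systems before commenting them out, and restore the fstab backup after patching.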

Patch Implementation Strategy Best Practices

1-) Run the Exadata healthcheck.
2-) Apply dbnodeupdate on compute node 1 (about 1 hour on average). After this step, the storage cells and the other compute nodes can be patched at the same time.
3-) Apply cellnodesupdate from compute node 1. All storage cells are patched (about 1 hour on average).
4-) Apply dbnodeupdate on each of the other compute nodes (about 1 hour on average).
5-) Check that the updates completed successfully on the compute nodes and storage cells.
6-) Run the cleanup for the storage cells and compute nodes. CRS and the databases are started in this step (about 5-10 minutes on average).
7-) Apply roceswitchesupdate from compute node 1. It runs in a rolling fashion automatically, so the databases, already open after step 6, keep running (about 1 hour on average). On InfiniBand-based systems (before X8M), apply ibswitchesupdate instead.
8-) Run the Exadata healthcheck again.
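Step 5 asks you to confirm that every node reports the new image. The POSIX shell sketch below is a hypothetical helper, not Oracle tooling: it reads the output of the imageinfo commands used in this article (`imageinfo | grep 'Image version'` on compute nodes, `'Active image version'` on cells) and lists the nodes that are not on the target version. The line format in the comments is assumed from those grep patterns.

```shell
# check_versions TARGET
# Reads "dcli ... imageinfo | grep ..." output on stdin, such as
#   exa01db01: Image version: 20.1.9.0.0.<build>
#   exa01cel01: Active image version: 20.1.9.0.0.<build>
# Prints "host version" for every node whose version is neither TARGET nor
# TARGET.<something>, and returns 1 if at least one such node exists.
check_versions() {
  awk -v target="$1" '
    /[Ii]mage version:/ {
      host = $1
      sub(/:$/, "", host)          # strip the ":" that dcli appends to the host
      ver = $NF                    # the version is the last field
      if (ver != target && index(ver, target ".") != 1) {
        print host, ver
        bad = 1
      }
    }
    END { exit bad }'
}
```

Usage, from the first compute node: `dcli -l root -g /root/dbs_group imageinfo | grep 'Image version' | check_versions 20.1.9`, and the same with `/root/cell_group` and `'Active image version'` for the cells. Matching on `TARGET.` avoids treating a hypothetical 20.1.90 as 20.1.9.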

Prechk Steps

Prechk Compute Node
https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-EE218087-4BFC-45EB-93BF-077848E416DC
#Configure ssh equivalency
dcli -g /root/dbs_group -l root -k
#Prechk on every compute node
/setup/patch/exa2019/dbpatchmgr/dbnodeupdate.sh -u -v -l /setup/patch/exa2019/dbfolder/p32743628_201000_Linux-x86-64.zip
#Analyze the output.

Prechk Cell Node
https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-CA73F012-6D43-46A5-B5AD-2EB8B41C8164
#Configure ssh equivalency
dcli -g /root/cell_group -l root -k
#Prechk on the first compute node
cd /setup/patch/exa2019/cellfolder/
unzip p32853086_201000_Linux-x86-64.zip
cd patch*
./patchmgr -cells /root/cell_group -reset_force
./patchmgr -cells /root/cell_group -cleanup
./patchmgr -cells /root/cell_group -patch_check_prereq
#Analyze the output.

Prechk Switches (InfiniBand or RoCE)
#RoCE switch: https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-29D4228C-C611-4077-AC2C-E24F42C203B0
#InfiniBand switch: https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-C20CAA84-D0C1-4772-86C5-B6C10DDDE3D3
#Prechk on the first compute node
cd /setup/patch/exa2019/swpatch/
unzip p32743624_201000_Linux-x86-64.zip
cd patch*
#Configure ssh equivalency
#dcli -g /root/ibs_group -l root -k
./roce_switch_api/setup_switch_ssh_equiv.sh xxxIPxxx,xxxIPxxx
#InfiniBand switch prechk
#./patchmgr -ibswitches /root/ibs_group -upgrade -ibswitch_precheck
#RoCE switch verify & prechk
time ./patchmgr --roceswitches /root/roceswitches.lst --verify-config --log_dir /u01/install/scratchpad/
time ./patchmgr --roceswitches /root/roceswitches.lst --upgrade --roceswitch-precheck --log_dir /u01/install/scratchpad/
#Analyze the output.
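The prechecks and the patch runs depend on root SSH equivalence from the first compute node, which `dcli -k` sets up. A quick way to confirm it before running patchmgr is a non-interactive login test against each group file. The sketch below is a hypothetical helper (check_ssh_equiv) that uses only standard OpenSSH options; the SSH_CMD override exists only so it can be exercised without real hosts.

```shell
# check_ssh_equiv GROUP_FILE [USER]
# Tries a non-interactive ssh login to every host listed in GROUP_FILE and
# prints the hosts that do not accept key-based login. Returns 1 if any fail.
# SSH_CMD may name another command (used for testing); default is ssh.
check_ssh_equiv() {
  local group="$1" user="${2:-root}" host failed=0
  while read -r host; do
    case "$host" in
      ''|\#*) continue ;;               # skip blank lines and comments
    esac
    # BatchMode=yes: fail instead of prompting for a password
    # -n: do not let ssh consume the group file from stdin
    if ! "${SSH_CMD:-ssh}" -n -o BatchMode=yes -o ConnectTimeout=5 \
         "${user}@${host}" true 2>/dev/null; then
      echo "no ssh equivalence: ${user}@${host}"
      failed=1
    fi
  done < "$group"
  return "$failed"
}
```

For example, `check_ssh_equiv /root/dbs_group && check_ssh_equiv /root/cell_group` prints nothing and succeeds when equivalence is in place; any host it prints needs `dcli -k` (or the switch equivalence script) rerun for it.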

Patch Steps

Patch Compute Node
https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-EE218087-4BFC-45EB-93BF-077848E416DC
#Stop CRS
#GRID_HOME must be set in the current shell; it is expanded locally before dcli sends the command
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl disable crs"
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl stop crs"
#Reboot all compute nodes
#reboot
#Check compute node alerts
dcli -l root -g /root/dbs_group dbmcli -e "list alerthistory attributes name,beginTime,alertMessage where alerttype=stateful and endtime=null" | grep -v aide
#Apply the patch on every compute node as root
/setup/patch/exa2019/dbpatchmgr/dbnodeupdate.sh -u -l /setup/patch/exa2019/dbfolder/p32743628_201000_Linux-x86-64.zip
#Check the image version of the compute nodes from the first compute node as root
dcli -l root -g /root/dbs_group imageinfo | grep 'Image version'
#Post-run on every compute node as root
/setup/patch/exa2019/dbpatchmgr/dbnodeupdate.sh -c

Patch Cell Node
https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-CA73F012-6D43-46A5-B5AD-2EB8B41C8164
#Apply the patch from the first compute node as root
#Stop all cell services
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl disable crs"
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl stop crs"
dcli -g /root/cell_group -l root "cellcli -e alter cell shutdown services all"
#Check cell node alerts
dcli -l root -g /root/cell_group cellcli -e "list alerthistory attributes name,beginTime,alertMessage where alerttype=stateful and endtime=null" | grep -v aide
#Monitor the cells
dcli -g /root/cell_group -l root "uptime"
dcli -g /root/cell_group -l root "cellcli -e list physicaldisk"
dcli -g /root/cell_group -l root "cellcli -e list celldisk"
dcli -g /root/cell_group -l root "cellcli -e list griddisk"
dcli -g /root/cell_group -l root "service celld status"
dcli -g /root/cell_group -l root "systemctl list-unit-files celld.service" | grep celld
#dcli -g /root/cell_group -l root chkconfig --list celld
dcli -g /root/cell_group -l root "cellcli -e list griddisk attributes name,status,asmmodestatus,asmdeactivationoutcome"
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl check crs"
dcli -g /root/dbs_group -l root "ps -ef | grep grid"
dcli -g /root/dbs_group -l root "ps -ef | grep -e crs -e smon -e pmon"
#Unzip the patch file
cd /setup/patch/exa2019/cellfolder/
unzip p32853086_201000_Linux-x86-64.zip
cd patch*
#Patch the storage cells (progress is written to nohup.out)
nohup ./patchmgr -cells /root/cell_group -patch &
#Post-run, after the patch run above has finished
./patchmgr -cells /root/cell_group -cleanup
#Check the image version of the cells
dcli -l root -g /root/cell_group imageinfo | grep 'Active image version'
#Start CRS
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl enable crs"
dcli -g /root/dbs_group -l root "$GRID_HOME/bin/crsctl start crs"
#List grid disk status
dcli -g /root/cell_group -l root "cellcli -e list griddisk attributes name,status,asmmodestatus,asmdeactivationoutcome"

Patch Switches (InfiniBand or RoCE)
#RoCE switch: https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-29D4228C-C611-4077-AC2C-E24F42C203B0
#InfiniBand switch: https://docs.oracle.com/en/engineered-systems/exadata-database-machine/dbmmn/updating-exadata-software.html#GUID-C20CAA84-D0C1-4772-86C5-B6C10DDDE3D3
#Restart the switches in ibs_group one by one
#Each one takes 5-10 min
#reboot
#InfiniBand switch prechk
cd /setup/patch/exa2019/swpatch/
cd patch*
./patchmgr -ibswitches /root/ibs_group -upgrade -ibswitch_precheck
#Apply the patch from the first compute node as root
#The subnet master should be first in the ibs_group list.
#The subnet manager determines the patch order of the switches. You can check the subnet master with getmaster -l on the switches.
cd /setup/patch/exa2019/swpatch/
unzip p32743624_201000_Linux-x86-64.zip
cd patch*
#Each InfiniBand switch takes 15-20 min
#nohup ./patchmgr -ibswitches /root/ibs_group -upgrade &
#View the InfiniBand switch versions
#dcli -l root -g /root/ibs_group version
#dcli -l root -g /root/ibs_group uptime
#RoCE switch
#Each RoCE switch takes 15-20 min
time ./patchmgr --roceswitches /root/roceswitches_leaf.lst --upgrade --log_dir /u01/install/scratchpad/
time ./patchmgr --roceswitches /root/roceswitches_spine.lst --upgrade --log_dir /u01/install/scratchpad/
#SSH to the RoCE switches and view the version
dcli -l root -g /root/roce_group "show version"
dcli -l root -g /root/roce_group uptime
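After CRS is started, ASM brings the grid disks back, and the grid disk status command in the cell section shows their asmmodestatus. A small polling sketch can wait until every grid disk reports ONLINE before you continue with the switch upgrade. It assumes that command's column order (host, name, status, asmmodestatus, asmdeactivationoutcome); the function names are hypothetical.

```shell
# list_griddisk_status : the grid disk status command used in this article
list_griddisk_status() {
  dcli -g /root/cell_group -l root \
    "cellcli -e list griddisk attributes name,status,asmmodestatus,asmdeactivationoutcome"
}

# griddisks_not_online : filters lines such as
#   exa01cel01: DATAC1_CD_00_exa01cel01  active  ONLINE  Yes
# and prints the grid disks whose asmmodestatus (4th field) is not ONLINE.
# Note: grid disks not used by ASM (UNUSED) are reported as well.
griddisks_not_online() {
  awk 'NF >= 5 && $4 != "ONLINE"'
}

# wait_griddisks_online [INTERVAL_SECONDS] [MAX_TRIES]
# Polls until every grid disk is ONLINE; returns 1 after MAX_TRIES polls.
# Empty output (e.g. dcli failed) is treated as "not ready", never as success.
wait_griddisks_online() {
  local interval="${1:-60}" tries="${2:-60}" i=1 out total pending
  while [ "$i" -le "$tries" ]; do
    out=$(list_griddisk_status)
    total=$(printf '%s\n' "$out" | awk 'NF >= 5 { n++ } END { print n + 0 }')
    pending=$(printf '%s\n' "$out" | awk 'NF >= 5 && $4 != "ONLINE" { n++ } END { print n + 0 }')
    if [ "$total" -gt 0 ] && [ "$pending" -eq 0 ]; then
      echo "all grid disks ONLINE"
      return 0
    fi
    echo "poll $i: $pending of $total grid disk(s) not ONLINE yet"
    sleep "$interval"
    i=$((i + 1))
  done
  return 1
}
```

For example, `wait_griddisks_online 60 60` checks once a minute for up to an hour; `list_griddisk_status | griddisks_not_online` shows which disks are still SYNCING or OFFLINE.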

3-) Conclusion

In this article, we patched the compute nodes, storage cells, and RoCE switches step by step. The operation upgraded an X8M-2 Exadata system to image version 20.1.9.

4-) References

IT Tutorial | Oracle DBA | SQL Server, GoldenGate, Exadata, Big Data, Data Science Tutorial


Exadata general master note: Doc ID 888828.1

Image version 20.1.9 files;
