Hi,

In this article, I will continue explaining how to upgrade Grid Infrastructure (GI) from Oracle 12cR2 to Oracle 18c on a Full Rack (eight-node) Oracle Exadata X7-2.

Read and apply the first article before this one, because you cannot upgrade Grid Infrastructure without completing the steps in it.

https://ittutorial.org/18c-upgrade-oracle-grid-infrastructure-upgrade-from-oracle-12c-to-oracle-18c-in-full-rack-oracle-exadata-x7-steps-1-patch-27006180-failed-24600431-patch-rollback/

Once you have completed the steps in the first article, you can start upgrading Oracle Grid Infrastructure from Oracle 12cR2 to Oracle 18c.

You can download the Oracle 18c installation files from Metalink (My Oracle Support) or from the download page shown below.

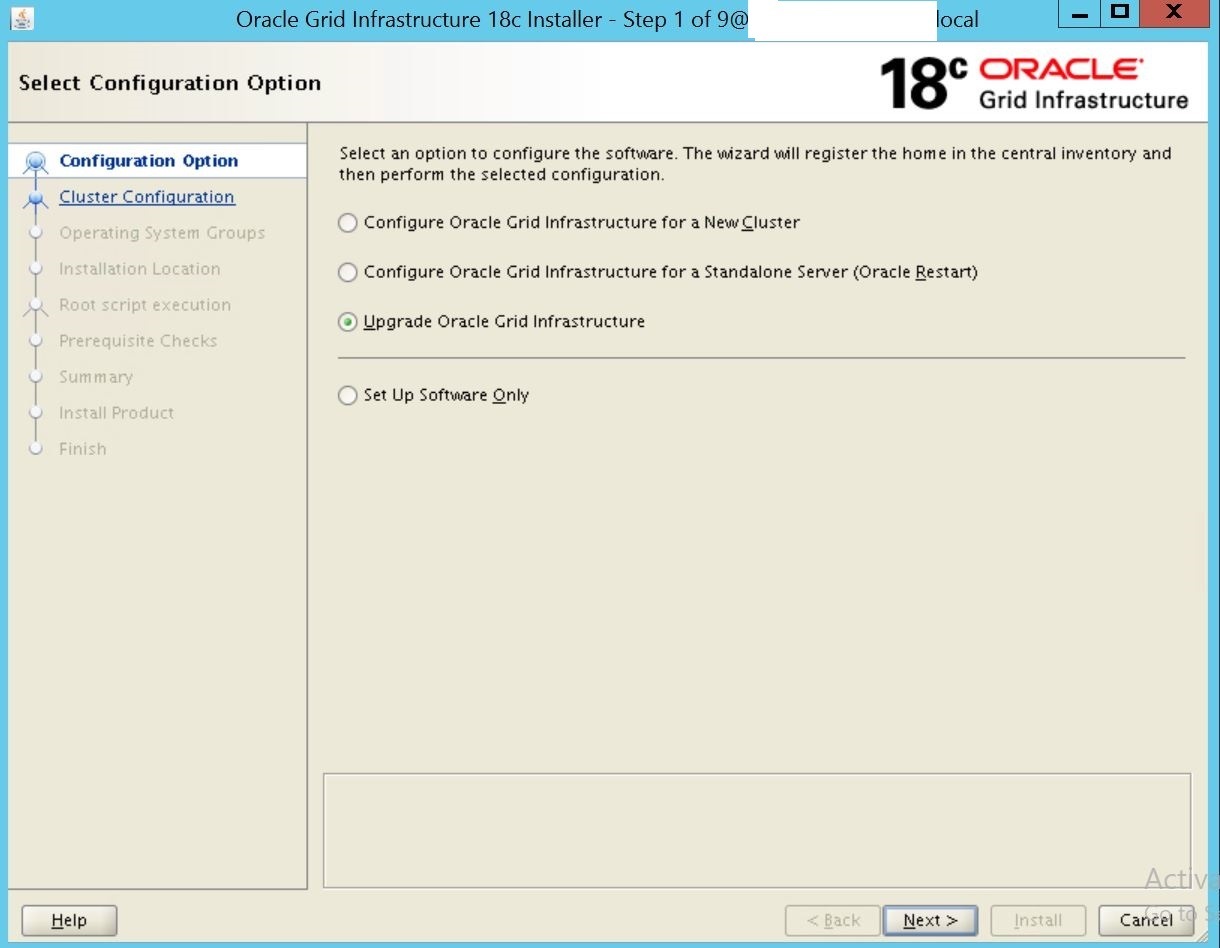

Download the Grid Infrastructure software and upload it to the Exadata server. Unzip the file and run sh gridSetup.sh to start the upgrade.
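A minimal sketch of the staging step, run as root on the first node, is shown below. It assumes the zip was uploaded as /u01/stage/LINUX.X64_180000_grid_home.zip, that the new Grid home is /u01/grid and the software owner is grid (both taken from the rootupgrade.sh log later in this article), and that the owner's group is oinstall; the staging path, file name and group are assumptions, so adjust them. Because 18c Grid Infrastructure is delivered as an image, the zip is extracted directly into the new Grid home. By default (DRY_RUN=1) the script only prints the commands.

```shell
#!/usr/bin/env bash
# Minimal sketch: stage the 18c Grid Infrastructure image on the first node (run as root).
# ASSUMPTIONS (adjust to your environment):
#   GI_ZIP     - hypothetical path/name of the uploaded 18c GI zip
#   GRID_HOME  - new 18c Grid home (/u01/grid in this article's log)
#   GRID_USER  - software owner ("grid" per the log); GRID_GROUP is an assumption
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them.
set -euo pipefail

GI_ZIP="${GI_ZIP:-/u01/stage/LINUX.X64_180000_grid_home.zip}"
GRID_HOME="${GRID_HOME:-/u01/grid}"
GRID_USER="${GRID_USER:-grid}"
GRID_GROUP="${GRID_GROUP:-oinstall}"
DRY_RUN="${DRY_RUN:-1}"

run() {
  # Always print the command; execute it only when DRY_RUN=0.
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

stage_grid_home() {
  # The 18c image is unzipped in place: the extracted directory becomes the Grid home.
  run mkdir -p "$GRID_HOME"
  run chown "$GRID_USER:$GRID_GROUP" "$GRID_HOME"
  run su - "$GRID_USER" -c "cd $GRID_HOME && unzip -q $GI_ZIP"
}

stage_grid_home
echo "Next (as $GRID_USER): cd $GRID_HOME && sh gridSetup.sh"
```

Set DRY_RUN=0 to actually execute the commands. gridSetup.sh itself is an interactive installer, so start it as the grid user from a session with a working X display.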

The Upgrade Oracle Grid Infrastructure option is selected automatically. Click Next.

Select the cluster nodes in this step. We have 8 nodes, so I selected them as follows.

Click SSH Connectivity and set up SSH connectivity between the nodes manually if it doesn’t already exist.

I have already set up SSH connectivity between the nodes, so I can continue.
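Before clicking Next, you can also confirm passwordless SSH from the command line. This is a minimal sketch: the node names MehmetSalih01 to MehmetSalih08 are hypothetical (extrapolated from the MehmetSalih01 and MehmetSalih03 hostnames in the log below), and it checks the grid user, which matches ORACLE_OWNER in that log. With BatchMode=yes, ssh fails instead of prompting for a password, so a missing key shows up as FAIL rather than a hung session.

```shell
#!/usr/bin/env bash
# Minimal sketch: check passwordless SSH for the grid owner to every cluster node.
# HYPOTHETICAL node names, extrapolated from the hostnames seen in the upgrade log.
set -uo pipefail

GRID_USER="${GRID_USER:-grid}"
NODES=(MehmetSalih01 MehmetSalih02 MehmetSalih03 MehmetSalih04
       MehmetSalih05 MehmetSalih06 MehmetSalih07 MehmetSalih08)

check_ssh() {
  local node failed=0
  for node in "$@"; do
    # BatchMode=yes makes ssh fail instead of asking for a password;
    # ConnectTimeout keeps an unreachable node from blocking the loop for long.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$GRID_USER@$node" true </dev/null 2>/dev/null; then
      echo "OK   $node"
    else
      echo "FAIL $node"
      failed=1
    fi
  done
  # Non-zero when at least one node could not be reached without a password.
  return "$failed"
}
```

Run `check_ssh "${NODES[@]}"` on each node in turn, because the installer expects SSH equivalence between every pair of nodes, not only from the first node.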

You can optionally register Oracle GI 18c with Enterprise Manager Cloud Control in this step.

Enter the Oracle base directory in this step.

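You can optionally select Automatically run configuration scripts. If you leave it unselected, as in this article, the installer asks you to run rootupgrade.sh manually as root.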

The installer then verifies the installation prerequisites.

Because we performed the prerequisite steps in the first article, there are no errors in this step. Click Submit to start the upgrade.

The upgrade has started.
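If you want to repeat the prerequisite checks from the command line, the Cluster Verification Utility has a rolling pre-upgrade stage check. The sketch below only assembles and prints the command by default; the old home /u01/app/12.2.0.1/grid, the new home /u01/grid and the version string 18.0.0.0.0 are taken from the rootupgrade.sh log in this article, and the option list should be confirmed against the cluvfy documentation for your release before running it as the grid user.

```shell
#!/usr/bin/env bash
# Minimal sketch: build (and optionally run) the cluvfy rolling pre-upgrade check.
# Homes and version come from this article's rootupgrade.sh log; adjust if yours differ.
# DRY_RUN=1 (default) only prints the command; run it as the grid owner, not root.
set -euo pipefail

OLD_GRID_HOME="${OLD_GRID_HOME:-/u01/app/12.2.0.1/grid}"
NEW_GRID_HOME="${NEW_GRID_HOME:-/u01/grid}"
DEST_VERSION="${DEST_VERSION:-18.0.0.0.0}"
DRY_RUN="${DRY_RUN:-1}"

# Pre-check for a rolling upgrade of the Clusterware installation stage.
CLUVFY_ARGS=(stage -pre crsinst -upgrade -rolling
             -src_crshome "$OLD_GRID_HOME"
             -dest_crshome "$NEW_GRID_HOME"
             -dest_version "$DEST_VERSION"
             -fixup -verbose)

echo "+ $NEW_GRID_HOME/runcluvfy.sh ${CLUVFY_ARGS[*]}"
if [ "$DRY_RUN" = "0" ]; then
  # runcluvfy.sh is the wrapper shipped at the top of the new (not yet configured) Grid home.
  "$NEW_GRID_HOME/runcluvfy.sh" "${CLUVFY_ARGS[@]}"
fi
```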

In this step, you must run rootupgrade.sh as root on all nodes.

Run rootupgrade.sh as follows: first run it on the first node and, after it completes, run it on the other nodes.

    [root@MehmetSalih01 u01]#
    [root@MehmetSalih01 u01]# /u01/grid/rootupgrade.sh
    Performing root user operation.
    The following environment variables are set as:
    ORACLE_OWNER= grid
    ORACLE_HOME= /u01/grid
    Enter the full pathname of the local bin directory: [/usr/local/bin]:
    The contents of "dbhome" have not changed. No need to overwrite.
    The file "oraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
    Copying oraenv to /usr/local/bin ...
    The file "coraenv" already exists in /usr/local/bin. Overwrite it? (y/n) [n]: y
    Copying coraenv to /usr/local/bin ...
    Entries will be added to the /etc/oratab file as needed by Database Configuration Assistant when a database is created
    Finished running generic part of root script.
    Now product-specific root actions will be performed.
    Relinking oracle with rac_on option
    Using configuration parameter file: /u01/grid/crs/install/crsconfig_params
    The log of current session can be found at:
    /u01/app/grid/crsdata/MehmetSalih01/crsconfig/rootcrs_MehmetSalih01_2019-08-02_06-13-09PM.log
    2019/08/02 18:13:45 CLSRSC-595: Executing upgrade step 1 of 19: 'UpgradeTFA'.
    2019/08/02 18:13:45 CLSRSC-4015: Performing install or upgrade action for Oracle Trace File Analyzer (TFA) Collector.
    2019/08/02 18:14:49 CLSRSC-4003: Successfully patched Oracle Trace File Analyzer (TFA) Collector.
    2019/08/02 18:14:49 CLSRSC-595: Executing upgrade step 2 of 19: 'ValidateEnv'.
    2019/08/02 18:14:58 CLSRSC-595: Executing upgrade step 3 of 19: 'GetOldConfig'.
    2019/08/02 18:14:58 CLSRSC-464: Starting retrieval of the cluster configuration data
    2019/08/02 18:15:14 CLSRSC-692: Checking whether CRS entities are ready for upgrade. This operation may take a few minutes.
    2019/08/02 18:16:31 CLSRSC-693: CRS entities validation completed successfully.
    2019/08/02 18:16:39 CLSRSC-515: Starting OCR manual backup.
    2019/08/02 18:16:55 CLSRSC-516: OCR manual backup successful.
    2019/08/02 18:17:05 CLSRSC-486: At this stage of upgrade, the OCR has changed.
    Any attempt to downgrade the cluster after this point will require a complete cluster outage to restore the OCR.
    2019/08/02 18:17:05 CLSRSC-541: To downgrade the cluster:
    1. All nodes that have been upgraded must be downgraded.
    2019/08/02 18:17:05 CLSRSC-542: 2. Before downgrading the last node, the Grid Infrastructure stack on all other cluster nodes must be down.
    2019/08/02 18:17:05 CLSRSC-615: 3. The last node to downgrade cannot be a Leaf node.
    2019/08/02 18:17:20 CLSRSC-465: Retrieval of the cluster configuration data has successfully completed.
    2019/08/02 18:17:20 CLSRSC-595: Executing upgrade step 4 of 19: 'GenSiteGUIDs'.
    2019/08/02 18:17:29 CLSRSC-595: Executing upgrade step 5 of 19: 'UpgPrechecks'.
    2019/08/02 18:17:49 CLSRSC-595: Executing upgrade step 6 of 19: 'SaveParamFile'.
    2019/08/02 18:18:05 CLSRSC-595: Executing upgrade step 7 of 19: 'SetupOSD'.
    2019/08/02 18:18:05 CLSRSC-595: Executing upgrade step 8 of 19: 'PreUpgrade'.
    2019/08/02 18:19:04 CLSRSC-468: Setting Oracle Clusterware and ASM to rolling migration mode
    2019/08/02 18:19:04 CLSRSC-482: Running command: '/u01/app/12.2.0.1/grid/bin/crsctl start rollingupgrade 18.0.0.0.0'
    CRS-1131: The cluster was successfully set to rolling upgrade mode.
    2019/08/02 18:19:07 CLSRSC-482: Running command: '/u01/grid/bin/asmca -silent -upgradeNodeASM -nonRolling false -oldCRSHome /u01/app/12.2.0.1/grid -oldCRSVersion 12.2.0.1.0 -firstNode true -startRolling false '
    ASM configuration upgraded in local node successfully.
    2019/08/02 18:19:12 CLSRSC-469: Successfully set Oracle Clusterware and ASM to rolling migration mode
    2019/08/02 18:19:21 CLSRSC-466: Starting shutdown of the current Oracle Grid Infrastructure stack
    2019/08/02 18:20:09 CLSRSC-467: Shutdown of the current Oracle Grid Infrastructure stack has successfully completed.
    2019/08/02 18:20:53 CLSRSC-595: Executing upgrade step 9 of 19: 'CheckCRSConfig'.
    2019/08/02 18:20:54 CLSRSC-595: Executing upgrade step 10 of 19: 'UpgradeOLR'.
    2019/08/02 18:21:12 CLSRSC-595: Executing upgrade step 11 of 19: 'ConfigCHMOS'.
    2019/08/02 18:21:12 CLSRSC-595: Executing upgrade step 12 of 19: 'UpgradeAFD'.
    2019/08/02 18:21:27 CLSRSC-595: Executing upgrade step 13 of 19: 'createOHASD'.
    2019/08/02 18:21:43 CLSRSC-595: Executing upgrade step 14 of 19: 'ConfigOHASD'.
    2019/08/02 18:21:43 CLSRSC-329: Replacing Clusterware entries in file 'oracle-ohasd.conf'
    2019/08/02 18:22:33 CLSRSC-595: Executing upgrade step 15 of 19: 'InstallACFS'.
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'MehmetSalih01'
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'MehmetSalih01' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Oracle High Availability Services has been started.
    2019/08/02 18:23:12 CLSRSC-595: Executing upgrade step 16 of 19: 'InstallKA'.
    2019/08/02 18:23:42 CLSRSC-595: Executing upgrade step 17 of 19: 'UpgradeCluster'.
    CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'MehmetSalih01'
    CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'MehmetSalih01' has completed
    CRS-4133: Oracle High Availability Services has been stopped.
    CRS-4123: Starting Oracle High Availability Services-managed resources
    CRS-2672: Attempting to start 'ora.mdnsd' on 'MehmetSalih01'
    CRS-2672: Attempting to start 'ora.evmd' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.mdnsd' on 'MehmetSalih01' succeeded
    CRS-2676: Start of 'ora.evmd' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.gpnpd' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.gpnpd' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.gipcd' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.gipcd' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.crf' on 'MehmetSalih01'
    CRS-2672: Attempting to start 'ora.cssdmonitor' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.cssdmonitor' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.cssd' on 'MehmetSalih01'
    CRS-2672: Attempting to start 'ora.diskmon' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.crf' on 'MehmetSalih01' succeeded
    CRS-2676: Start of 'ora.diskmon' on 'MehmetSalih01' succeeded
    CRS-2676: Start of 'ora.cssd' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.ctssd' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.ctssd' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.asm' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.storage' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.storage' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.crsd' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.crsd' on 'MehmetSalih01' succeeded
    CRS-6023: Starting Oracle Cluster Ready Services-managed resources
    CRS-6017: Processing resource auto-start for servers: MehmetSalih01
    CRS-2673: Attempting to stop 'ora.MehmetSalih01.vip' on 'MehmetSalih03'
    CRS-2672: Attempting to start 'ora.ons' on 'MehmetSalih01'
    CRS-2677: Stop of 'ora.MehmetSalih01.vip' on 'MehmetSalih03' succeeded
    CRS-2672: Attempting to start 'ora.MehmetSalih01.vip' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.MehmetSalih01.vip' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.LISTENER.lsnr' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.LISTENER.lsnr' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.asm' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.ons' on 'MehmetSalih01' succeeded
    CRS-2676: Start of 'ora.asm' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.DATA.dg' on 'MehmetSalih01'
    CRS-2672: Attempting to start 'ora.proxy_advm' on 'MehmetSalih01'
    CRS-2672: Attempting to start 'ora.RECO.dg' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.DATA.dg' on 'MehmetSalih01' succeeded
    CRS-2676: Start of 'ora.RECO.dg' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.devecidb.db' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.proxy_advm' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.RECO.ACFS_VOL1.advm' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.RECO.ACFS_VOL1.advm' on 'MehmetSalih01' succeeded
    CRS-2672: Attempting to start 'ora.reco.acfs_vol1.acfs' on 'MehmetSalih01'
    CRS-2676: Start of 'ora.reco.acfs_vol1.acfs' on 'MehmetSalih01' succeeded
    CRS-5017: The resource action "ora.devecidb.db start" encountered the following error:
    ORA-03113: end-of-file on communication channel
    Process ID: 303838
    Session ID: 328 Serial number: 49209
    . For details refer to "(:CLSN00107:)" in "/u01/app/grid/diag/crs/MehmetSalih01/crs/trace/crsd_oraagent_oracle.trc".
    CRS-2674: Start of 'ora.devecidb.db' on 'MehmetSalih01' failed
    CRS-2672: Attempting to start 'ora.devecidb.db' on 'MehmetSalih01'
    CRS-5017: The resource action "ora.devecidb.db start" encountered the following error:
    ORA-03113: end-of-file on communication channel
    Process ID: 313185
    Session ID: 92 Serial number: 17239
    . For details refer to "(:CLSN00107:)" in "/u01/app/grid/diag/crs/MehmetSalih01/crs/trace/crsd_oraagent_oracle.trc".
    CRS-2674: Start of 'ora.devecidb.db' on 'MehmetSalih01' failed
    ===== Summary of resource auto-start failures follows =====
    CRS-2807: Resource 'ora.devecidb.db' failed to start automatically.
    CRS-6016: Resource auto-start has completed for server MehmetSalih01
    CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources
    CRS-4123: Oracle High Availability Services has been started.
    2019/08/02 18:25:25 CLSRSC-343: Successfully started Oracle Clusterware stack
    clscfg: EXISTING configuration version 5 detected.
    clscfg: version 5 is 12c Release 2.
    Successfully taken the backup of node specific configuration in OCR.
    Successfully accumulated necessary OCR keys.
    Creating OCR keys for user 'root', privgrp 'root'..
    Operation successful.
    2019/08/02 18:25:49 CLSRSC-595: Executing upgrade step 18 of 19: 'UpgradeNode'.
    2019/08/02 18:25:57 CLSRSC-474: Initiating upgrade of resource types
    2019/08/02 18:26:21 CLSRSC-475: Upgrade of resource types successfully initiated.
    2019/08/02 18:26:49 CLSRSC-595: Executing upgrade step 19 of 19: 'PostUpgrade'.
    2019/08/02 18:26:59 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
    [root@MehmetSalih01 u01]#
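The first-node-then-others order can be scripted. The sketch below is a minimal example under stated assumptions: the node names MehmetSalih01 to MehmetSalih08 are hypothetical, root can ssh to every node, and the new Grid home is /u01/grid as in the log above. It runs the nodes strictly one after another and stops at the first failure; `ssh -t` allocates a terminal because rootupgrade.sh asks interactive questions (the local bin directory and the oraenv/coraenv overwrite prompts seen in the log). Oracle's upgrade documentation allows the middle nodes to run in parallel once the first node has finished, as long as the last node runs last; the sequential order here is slower but keeps the procedure simple.

```shell
#!/usr/bin/env bash
# Minimal sketch: run rootupgrade.sh node by node, first node first.
# ASSUMPTIONS: HYPOTHETICAL node names; root can ssh to every node;
# the new Grid home is /u01/grid as in the log above.
# DRY_RUN=1 (default) only prints the commands.
set -uo pipefail

GRID_HOME="${GRID_HOME:-/u01/grid}"
DRY_RUN="${DRY_RUN:-1}"
ALL_NODES=(MehmetSalih01 MehmetSalih02 MehmetSalih03 MehmetSalih04
           MehmetSalih05 MehmetSalih06 MehmetSalih07 MehmetSalih08)

run_rootupgrade() {
  # Nodes are processed in the order given; the loop stops at the first
  # failure so the remaining nodes are not touched until it is fixed.
  local node
  for node in "$@"; do
    echo "+ ssh -t root@$node $GRID_HOME/rootupgrade.sh"
    if [ "$DRY_RUN" = "0" ]; then
      # -t allocates a terminal for rootupgrade.sh's interactive prompts.
      if ! ssh -t "root@$node" "$GRID_HOME/rootupgrade.sh"; then
        echo "rootupgrade.sh failed on $node; fix it before continuing" >&2
        return 1
      fi
    fi
  done
}
```

Call `run_rootupgrade "${ALL_NODES[@]}"` for the full sequence. If the script fails on a particular node, fix the cause and pass only the affected nodes, for example `DRY_RUN=0 run_rootupgrade MehmetSalih05`.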

You may get the following error; in that case, run the rootupgrade.sh command above on the related nodes.

The upgrade task is proceeding normally.

The Oracle 18c Grid Infrastructure upgrade is completed, as shown below.
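To confirm the result from the command line as well, you can query Clusterware on any node. This minimal sketch assumes the new Grid home is /u01/grid (from the log above). It prints `crsctl query crs activeversion` and `crsctl query crs softwareversion`, then checks that the active version starts with 18. The parsing relies on the version being the first value in square brackets in the activeversion output, which matches the usual format but is an assumption; softwareversion puts the node name in brackets first, so it is only printed. `crsctl query crs activeversion -f` additionally reports the cluster upgrade state, which should no longer show a rolling upgrade once every node is done.

```shell
#!/usr/bin/env bash
# Minimal sketch: verify the cluster's active Clusterware version after the upgrade.
# ASSUMPTION: the new 18c Grid home is /u01/grid (taken from the log above).
set -uo pipefail

GRID_HOME="${GRID_HOME:-/u01/grid}"

# Print the first value enclosed in square brackets, e.g. "18.0.0.0.0".
parse_version() {
  sed -n 's/[^[]*\[\([^]]*\)].*/\1/p' <<<"$1" | head -n 1
}

# Succeed when the major release (digits before the first dot) is 18 or later.
is_18_or_later() {
  local v major
  v="$(parse_version "$1")"
  major="${v%%.*}"
  [[ "$major" =~ ^[0-9]+$ ]] && [ "$major" -ge 18 ]
}

# Query the live cluster; run as the grid owner on any node.
check_cluster() {
  local active
  active="$("$GRID_HOME/bin/crsctl" query crs activeversion)" || return 1
  echo "$active"
  # softwareversion shows the node name in brackets first, so it is only printed.
  "$GRID_HOME/bin/crsctl" query crs softwareversion
  is_18_or_later "$active"
}
```

Run `check_cluster && echo "Cluster is on 18c"` after the installer reports completion.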

If you want to learn Oracle Database performance tuning in detail, read the following articles.

https://ittutorial.org/oracle-database-performance-tuning-tutorial-12-what-is-the-automatic-sql-tuning-and-how-to-automated-sql-tuning/

IT Tutorial IT Tutorial | Oracle DBA | SQL Server, Goldengate, Exadata, Big Data, Data ScienceTutorial
